The Complete Architecture Workflow: Image to Video to 3D using ComfyUI

Get Complete Control over your AI workflow!

Learn how to create custom architectural design workflows in ComfyUI—covering text-to-image, image-to-image, image-to-video, and image-to-3D model—and transform your designs into human–AI co-created outputs by leveraging AI’s latent capabilities.

Schedule:

Day 01: Sat, 11th Oct | 14:00 - 18:00 CET

Day 02: Sun, 12th Oct | 14:00 - 18:00 CET

Course Level: Beginner, Intermediate

Length: 8 Hours on 1 Weekend (4+4 Hrs) + Submission

Prerequisites: No Prior Experience Required

Software + Tools: ComfyUI, ControlNet (Depth & Canny), Flux (Kontext, Dev, Redux, Fill), Gemini 2.5 Flash Image (Nano Banana), WAN 2.2, Hunyuan 3D/Sparc3D, Google Earth (Imagery / Screenshots), Rhino3D

Preparatory Session: Included

Audio Language: English

After-hours Support: Text & Image-based for 30 days after the live event.

Certificate: Available upon successful completion of the final assignment.

Recordings Availability: Recordings will be available for you with lifetime access.

3% Cashback as Credits

What You’ll Learn

This workshop is designed for beginners with no prior knowledge of ComfyUI. The aim is to turn an existing site image or project image into precise images, videos, and 3D models, with most of the work carried out locally to ensure security and control.

Here’s what you’ll specifically design:

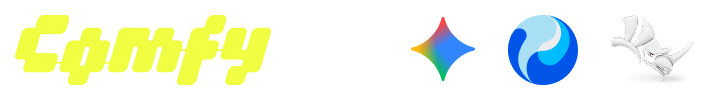

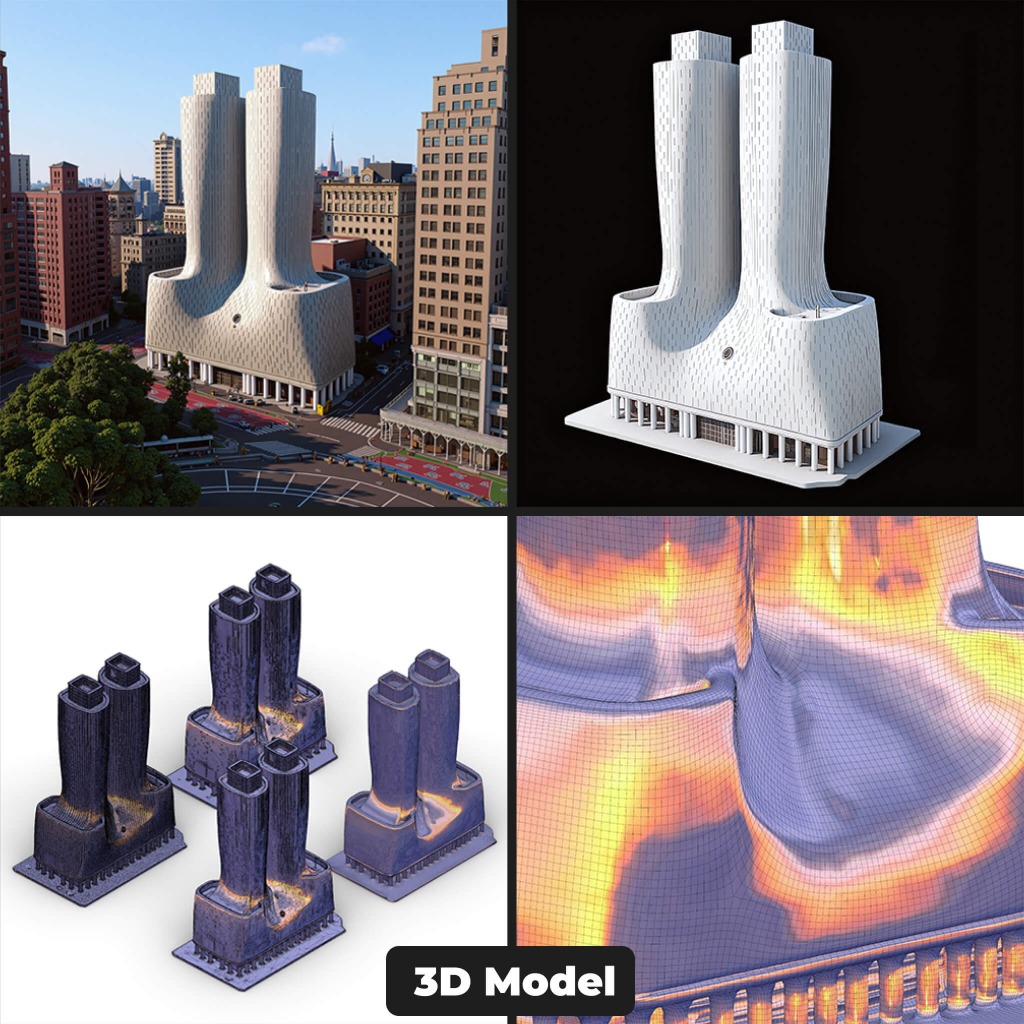

We begin with an introduction to the fundamentals—checkpoint models, latent space, VAEs, and prompt structures—so that participants gain a deeper understanding of how ComfyUI operates beneath the interface. From there, the workflow unfolds in three stages. The first is the image stage, where participants will use ControlNet modules such as Depth and Canny to guide the AI in generating design iterations that respond to time, season, and material conditions. Flux tools will then be applied for style transfer, inpainting, and upscaling to refine the outputs into coherent, detailed visuals.
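To make the fundamentals above concrete, here is a minimal sketch of how a basic text-to-image graph can be written in ComfyUI's API-style JSON, where each node has a `class_type` and its `inputs` either hold values or link to another node's output as `[node_id, output_index]`. It is an illustration, not the workshop's own workflow: the checkpoint filename, prompts, and sampler settings are placeholder assumptions, and the `dangling_links` helper is a hypothetical check written for this example. Flux and ControlNet workflows use additional or different nodes.

```python
import json

# Node IDs are arbitrary strings; a link is [source_node_id, output_index].
# "example_checkpoint.safetensors" is a placeholder, not a file from the workshop.
workflow = {
    # Checkpoint loader outputs: 0 = model, 1 = CLIP text encoder, 2 = VAE.
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "example_checkpoint.safetensors"}},
    # Positive and negative prompts are encoded separately.
    "2": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "timber pavilion, autumn, overcast light",
                     "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "blurry, distorted", "clip": ["1", 1]}},
    # Sampling starts from an empty latent, not from pixels.
    "4": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 1024, "height": 768, "batch_size": 1}},
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0],
                     "negative": ["3", 0], "latent_image": ["4", 0],
                     "seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal",
                     "denoise": 1.0}},
    # The VAE decodes the sampled latent back into an image.
    "6": {"class_type": "VAEDecode",
          "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
    "7": {"class_type": "SaveImage",
          "inputs": {"images": ["6", 0], "filename_prefix": "workshop_sketch"}},
}


def dangling_links(graph):
    """Return (node_id, input_name) pairs whose link points at a missing node."""
    missing = []
    for node_id, node in graph.items():
        for name, value in node["inputs"].items():
            is_link = (isinstance(value, list) and len(value) == 2
                       and isinstance(value[0], str))
            if is_link and value[0] not in graph:
                missing.append((node_id, name))
    return missing


print(dangling_links(workflow))
print(json.dumps(workflow["5"], indent=2))
```

Reading the graph from node 1 to node 7 follows the same order as the concepts in the paragraph: checkpoint, prompts, latent, sampling, and VAE decoding.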

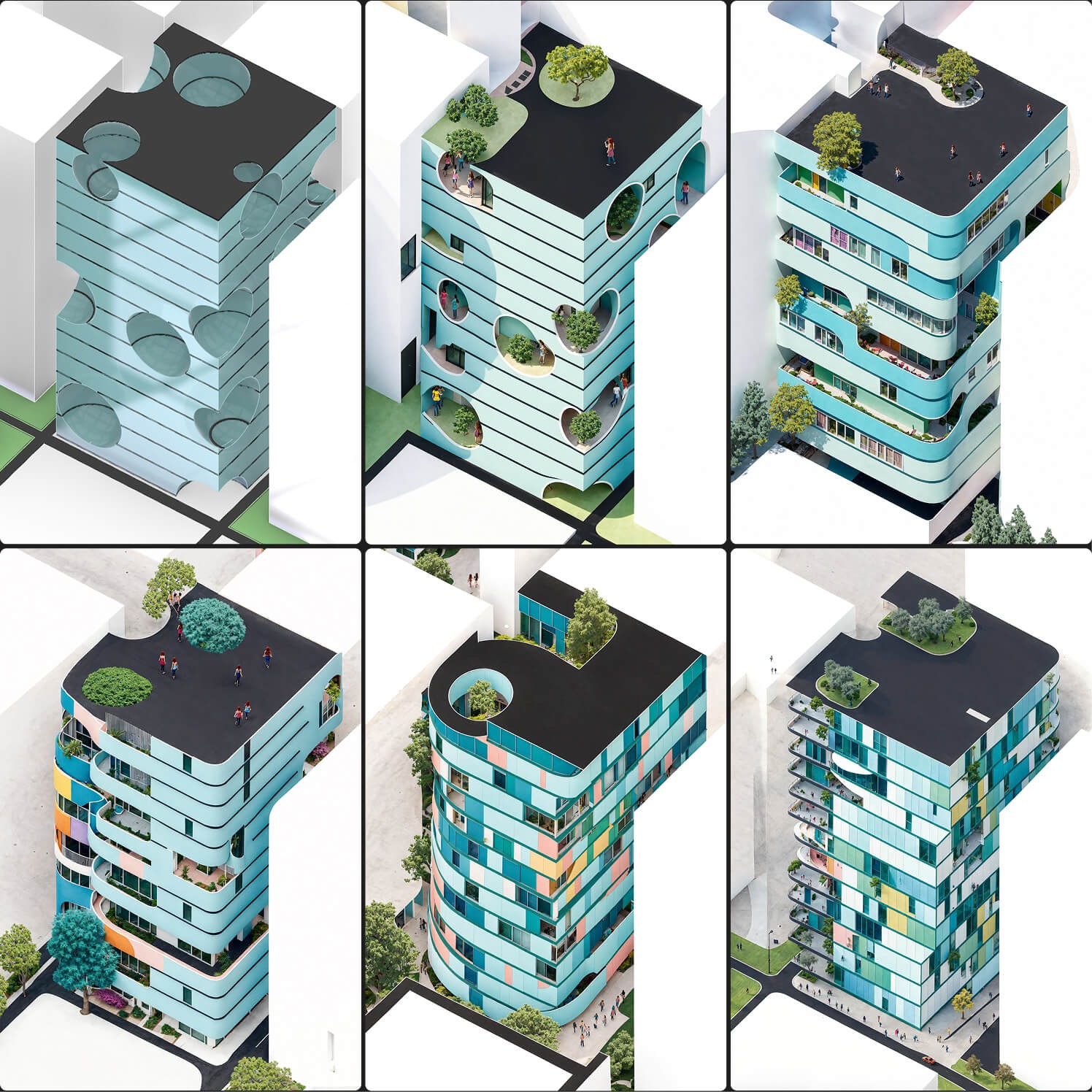

Second is the video stage, where still images will be converted into moving sequences using WAN 2.2. Participants will learn prompt structures, explore model variations, and adjust settings such as frame rate and camera motion to generate videos that add more detail to their designs.

Finally comes the 3D stage, where images will be prepared with Gemini 2.5 Flash Image (Nano Banana) for 3D interpretation, then converted through Hunyuan 3D or Sparc3D. The resulting models will be analyzed, refined, and cleaned in Rhino3D (or another preferred method) to create fabrication-ready geometry.

By the end of the workshop, participants will have completed the full cycle (text-to-image, image-to-image, image-to-video, and image-to-3D model), transforming their designs into human–AI co-created outputs by leveraging AI’s latent capabilities.
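For the frame rate setting mentioned in the video stage, the arithmetic linking clip length, frame rate, and frame count can be sketched as below. The function names are hypothetical, and the 16 fps value and the "multiple of 4, plus 1" frame-count rule are assumptions used for illustration; check the requirements of the specific video model and node you use.

```python
def frames_for_clip(seconds: float, fps: int) -> int:
    """Number of frames needed to cover `seconds` at `fps` frames per second."""
    return round(seconds * fps)


def snap_to_step_plus_one(frames: int, step: int = 4) -> int:
    """Snap a frame count to the nearest value of the form n * step + 1.

    Assumption: the video model expects counts such as 1, 5, 9, ... (step = 4).
    """
    n = max(0, round((frames - 1) / step))
    return n * step + 1


def clip_seconds(frames: int, fps: int) -> float:
    """Playback duration of `frames` frames at `fps` frames per second."""
    return frames / fps


target = frames_for_clip(5, 16)          # a 5-second clip at an assumed 16 fps
usable = snap_to_step_plus_one(target)   # nearest count the model would accept
print(target, usable, clip_seconds(usable, 16))
```

Changing the frame rate without changing the frame count only alters playback speed and duration, so it helps to decide the target duration first and derive the frame count from it.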


Objectives

🟣 Use open-source tools to keep control of your creative data.

🟣 Think critically about how much AI influence you want in your designs by controlling the inputs.

🟣 Create a negotiable space between your own thinking and the AI’s latent space.

🟣 Learn the language and workflow that AI understands, and identify where AI’s limitations arise.

🟣 Understand how small changes in the workflow can drastically alter the output.

🟣 Develop full workflows for realistic outputs across image, video, and 3D.

🟣 Build an iterative design mindset, redefining the role of architects and designers in the AI landscape.

Please Note

Software installation is part of the preparatory video provided a week before the live workshop event.

Participants must have all required software installed before the workshop begins. If you cannot install ComfyUI locally, you may use RunComfy, a cloud platform for running ComfyUI.

Please review RunComfy’s pricing, as it charges based on server rental time. RunComfy fully supports the models and nodes that we will be working with during the workshop.

Images Courtesy: Kedar Undale

Instructor

Kedar Undale

Architect, Computational & AI Designer, Kedar Undale Design Studio

Spain / India

Kedar is an Architect and Computational & AI Designer at Kedar Undale Design Studio, where he specializes in consulting, education, and creating art through parametric design. He holds a master’s degree from IAAC, Barcelona, and has worked as a Computational Designer with the Toronto-based firm Partisans. Kedar helps architects and designers realize complex, form-driven projects by translating conceptual ideas into executable solutions.

Over the past five years, he has conducted global workshops on ‘Parametric Thinking’ at institutions such as DigitalFUTURES, InterAccess Canada, PowrPlnt New York, and CEPT India. With the rise of generative AI, he has developed custom workflows and led training sessions for organizations and faculty development programs including PAACADEMY, UID Gujarat, and KLS GIT Belagavi. His artistic practice, featured in Homegrown Magazine India, leverages computational tools to visualize intangibles such as sound, wind, and gravity.

Yes, you'll receive a certificate of completion for the registered workshop, provided you complete the final assignment and it is approved.

This course includes lifetime access to all content for as long as the Futurly platform exists. Instructor support is only available for a limited time after purchase. For details, see our Lifetime Access Policy.

Group & Team Discounts: Special pricing is available for groups of 5 or more. Email us at hello@futurly.com with the number of participants, and we’ll get back to you with a custom offer.

Institutional Discounts: If you represent an institution and wish to enroll 10 or more of your students, faculty, or staff, reach out to hello@futurly.com for special pricing.

If you are a Futurly+ member, you get 30% of your membership purchase amount back as Credits, which you can use to purchase standalone courses and workshops, such as this one, with up to a 30% discount on your cart's value. Feel free to use your accumulated Credits at checkout to get a members' special discount. You can learn more about the membership here.

Yes, watch the preparatory video provided a week before the live event and follow the instructions to make sure you make the most of the workshop.

Please email us at hello@futurly.com with a clear explanation of your situation, and we'll do our best to make something work for you.

You can use the Futurly Dashboard to comment on the video and get your doubts clarified by the instructor. You may also post images to explain your doubts further.

There won't be refunds. However, in special circumstances, the registration may be transferred to another individual before the workshop or series launches.

We currently accept payments through all major credit and debit cards. We also support a custom PayPal registration link or crypto payments in special circumstances. If you're having trouble with payments, email hello@futurly.com with your name and course details, and we will take it from there.

We'll do our best to solve your problem. Email us at hello@futurly.com, or reach out to us directly using the chat button on this page.